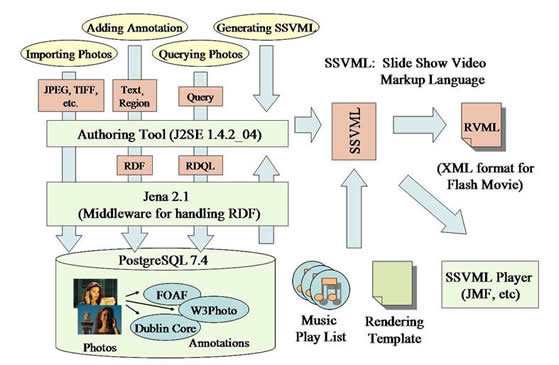

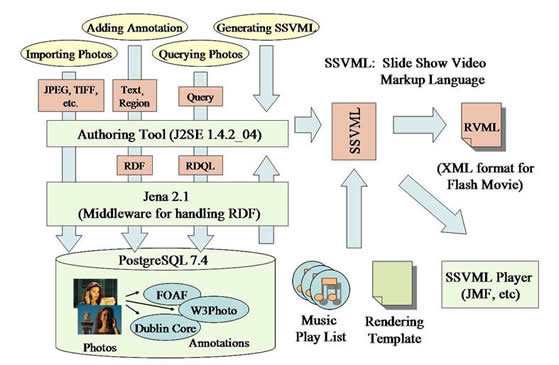

Figure 1 Overall architecture of authoring tool

Providing good care to dementia patients is becoming an important issue as the elderly population grows. One of the main problems in caring for dementia patients is that care must be provided constantly, without interruption, which places a great burden on caregivers (often the patient's family members). One approach to lessening this burden is to present the patient with an engaging stimulus that holds his or her attention. One promising stimulus is the so-called 'Reminiscence Video', a slide show video produced from the patient's old photo albums. The effects of showing a reminiscence video have been demonstrated experimentally [1].

Making a reminiscence video, however, is not easy, and it involves many steps. First, photo albums are collected from the patient's family. Next, photos that are likely to evoke the patient's distant memories are selected from the albums. Then, the photos are shot with a video camera, often using panning and zooming effects (the so-called 'Ken Burns effect'). Finally, narration is added, typically to get the patient more involved in the video. These steps are usually performed by skilled volunteers, and they are not tasks that family caregivers can perform while looking after the patient. Moreover, for some patients, the impact of a video may wear off after the same reminiscence video is shown again and again. Thus, there is demand for an easy way to make new reminiscence videos from the same set of photos.

To meet this demand, we have developed an authoring tool that makes use of photo annotations. The annotation attached to a photo includes its theme, such as 'travel' or 'school', and the date it was taken. In addition, particular 'regions' of the photo can be specified in the annotation. A region could represent, for example, a person's face or an interesting object in the photo. These regions are used when panning and zooming effects (Ken Burns effects) are added to the final video, a popular technique for making an attractive video from a set of still photos. The authoring tool also contains a database that stores the scanned photos taken from the patient's albums, and the annotations are made with the authoring tool itself. Once the annotated photos are in the database, a video can be produced as follows. First, the photos to be used in the video are retrieved by using the annotation data attached to each photo as search keys. Then, panning and zooming effects are added semi-automatically, making use of the region data in the photo annotations. Finally, background music can be specified as a play list.
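The annotation model and the retrieval step can be sketched as follows. This is a minimal, hypothetical Python model: the tool itself is written in Java and stores annotations as RDF in PostgreSQL. The class names, the pixel-rectangle region format, the regular-expression theme match and the date ordering of the results are all assumptions made for illustration.

```python
import re
from dataclasses import dataclass, field
from datetime import date


@dataclass
class Region:
    """Hypothetical region of interest (e.g. a face), in pixel coordinates."""
    label: str
    x: int
    y: int
    width: int
    height: int


@dataclass
class AnnotatedPhoto:
    """Hypothetical record: a scanned photo plus its theme, date and regions."""
    uri: str
    theme: str
    taken: date
    regions: list = field(default_factory=list)


def select_photos(photos, theme_pattern):
    """Return photos whose theme matches the pattern (case-insensitive), oldest first."""
    rx = re.compile(theme_pattern, re.IGNORECASE)
    hits = [p for p in photos if rx.search(p.theme)]
    return sorted(hits, key=lambda p: p.taken)


album = [
    AnnotatedPhoto("photo-03.jpg", "school", date(1952, 4, 1)),
    AnnotatedPhoto("photo-01.jpg", "travel", date(1960, 8, 15),
                   [Region("face", 120, 80, 60, 60)]),
    AnnotatedPhoto("photo-02.jpg", "Travel", date(1958, 5, 3)),
]

for p in select_photos(album, r"^travel$"):
    print(p.uri, p.taken.isoformat())
```

Because only the selection pattern changes, the same annotated album can yield a different set of photos (and hence a different video) on each run.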

Adding annotations to each photo is rather time-consuming. However, once the annotations exist, various kinds of video can be created from the same set of photos. This is very useful, especially when a patient gets bored after viewing a video only once. Photo annotations are mainly used for efficient image retrieval from a database (for example, [2]). In the proposed authoring tool, however, annotations are used not only for image retrieval but also for adding suitable visual effects to a reminiscence video.

Figure 1 shows the overall architecture of the authoring tool. As mentioned above, the photos are stored in the database together with their annotations. The database is PostgreSQL 7.4. Annotations are added with the authoring tool, which is written in Java (J2SE 1.4.2_04). Each annotation is represented and stored in RDF, and we used Jena 2.1 [3] to handle the RDF data. Three vocabularies were adopted to describe the annotations: Dublin Core [4], FOAF [5], and W3Photo [6]. Dublin Core describes various properties of the photo itself, FOAF mainly describes the people appearing in the photo, and W3Photo stores the region data of each photo.
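To show what such an annotation looks like as RDF, the sketch below builds a handful of triples for one photo and writes them in N-Triples syntax. In the actual tool this is handled by Jena in Java. The Python serializer here is only an illustration, and the photo IRI and the person's name are hypothetical. The Dublin Core (`dc:subject`, `dc:date`) and FOAF (`foaf:depicts`, `foaf:Person`, `foaf:name`) terms are real. The region vocabulary, however, is a placeholder under `example.org`, not the actual W3Photo namespace or property names.

```python
from typing import NamedTuple

# Real namespace URIs for Dublin Core elements, FOAF and rdf:type.
DC = "http://purl.org/dc/elements/1.1/"
FOAF = "http://xmlns.com/foaf/0.1/"
RDF_TYPE = "http://www.w3.org/1999/02/22-rdf-syntax-ns#type"
# Placeholder for the region vocabulary; NOT the actual W3Photo namespace or terms.
REGION = "http://example.org/region#"


class Lit(NamedTuple):
    """A plain RDF literal; bare strings are treated as IRIs or blank nodes."""
    value: str


def term(t):
    """Render one RDF term in N-Triples syntax."""
    if isinstance(t, Lit):
        escaped = t.value.replace("\\", "\\\\").replace('"', '\\"').replace("\n", "\\n")
        return f'"{escaped}"'
    if t.startswith("_:"):          # blank node label
        return t
    return f"<{t}>"                 # IRI


def to_ntriples(triples):
    """Serialize (subject, predicate, object) tuples, one triple per line."""
    return "\n".join(f"{term(s)} {term(p)} {term(o)} ." for s, p, o in triples)


photo = "http://example.org/photos/photo-01.jpg"    # hypothetical photo IRI
triples = [
    (photo, DC + "subject", Lit("travel")),          # theme of the photo
    (photo, DC + "date", Lit("1960-08-15")),         # date the photo was taken
    (photo, FOAF + "depicts", "_:grandma"),          # person appearing in the photo
    ("_:grandma", RDF_TYPE, FOAF + "Person"),
    ("_:grandma", FOAF + "name", Lit("Grandmother")),
    (photo, REGION + "hasRegion", "_:r1"),           # hypothetical region terms
    ("_:r1", REGION + "rect", Lit("120,80,60,60")),
    ("_:r1", REGION + "shows", "_:grandma"),
]

print(to_ntriples(triples))
```

Linking the region node to the same `foaf:Person` node is what lets a later step know whose face a given region contains.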


The user (video creator) selects photos for the video by specifying keywords that describe the 'theme' of the reminiscence video he or she wants to produce. The query can contain regular expressions as supported in RDQL [7]. A template specifies the kinds of effects applied to each photo and the transitions between photos. We implemented a simple template that applies a zooming effect to a particular region, panning effects between regions, and fade-in and fade-out transitions. In addition, background music (BGM) can be added as a play list. We defined SSVML (Slide Show Video Markup Language) as a compact representation of a slide show video. SSVML specifies the photos to be used, their order, and the effects and transitions. The authoring tool first outputs the video description in SSVML. This description is then converted into RVML (Rich Vector Markup Language) [8] and finally into a Flash movie (SWF file); we used a freely available tool from Kinetic Fusion [9] for the RVML-to-SWF conversion. Alternatively, we are also implementing player software that directly interprets SSVML.
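The simple template described above can be sketched as a function that turns a photo's region labels into a sequence of timed segments. This is a hypothetical Python illustration, not SSVML, whose syntax is not given here. The segment names and default durations are assumptions. The sketch only shows the kind of information a slide-show description carries for one photo: effects, their targets, their order and their timing.

```python
from dataclasses import dataclass


@dataclass
class Segment:
    kind: str        # "fade_in", "zoom", "pan" or "fade_out" (hypothetical names)
    target: str      # region the camera ends on; "" means the camera does not move
    seconds: float   # duration of the segment


def simple_template(region_labels, fade=1.0, zoom=2.0, pan=3.0):
    """Timeline for one photo: fade in, zoom into the first region,
    pan to each remaining region in order, then fade out."""
    segments = [Segment("fade_in", "", fade)]
    if region_labels:
        segments.append(Segment("zoom", region_labels[0], zoom))
        for label in region_labels[1:]:
            segments.append(Segment("pan", label, pan))
    segments.append(Segment("fade_out", "", fade))
    return segments


timeline = simple_template(["Region 1", "Region 2", "Region 3"])
for seg in timeline:
    print(seg.kind, seg.target or "-", seg.seconds)
print("total:", sum(seg.seconds for seg in timeline), "s")
```

A photo without annotated regions simply fades in and out. A whole slide show would then be these per-photo timelines concatenated in the order the user chose.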

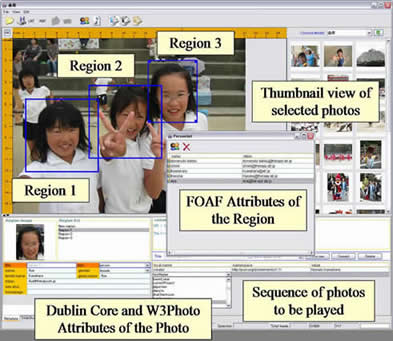

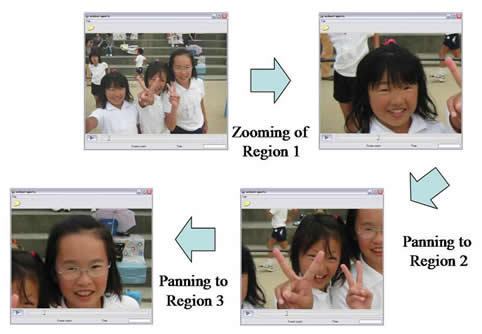

Figure 2 shows an example of annotating photos in the GUI, and Figure 3 shows some snapshots of the video produced by our system. In Figure 2, three regions are defined, and FOAF attributes can be specified for each region via Text Annotation Dialogs. In the movie automatically generated for this photo, Region 1 is zoomed, the displayed area then moves toward Region 2, and finally Region 3 is displayed.
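The camera path just described can be sketched as linear interpolation between viewport rectangles: the path starts on the full photo, zooms into Region 1, and then pans through the remaining regions. This is a minimal sketch under stated assumptions. Regions are axis-aligned pixel rectangles, the output frame is 4:3, and the photo already has that aspect ratio. The region coordinates below are made up, and the tool's actual easing and timing are not described in this paper.

```python
from typing import NamedTuple


class Rect(NamedTuple):
    """A viewport or region in photo pixel coordinates."""
    x: float
    y: float
    w: float
    h: float


def fit_aspect(r, aspect):
    """Grow a region about its centre until w / h equals the output aspect ratio."""
    w, h = r.w, r.h
    if w / h < aspect:
        w = h * aspect       # too narrow: widen
    else:
        h = w / aspect       # too wide (or exact): heighten
    cx, cy = r.x + r.w / 2, r.y + r.h / 2
    return Rect(cx - w / 2, cy - h / 2, w, h)


def clamp(r, img_w, img_h):
    """Shift a viewport back inside the photo (assumes it is no larger than the photo)."""
    x = min(max(r.x, 0), img_w - r.w)
    y = min(max(r.y, 0), img_h - r.h)
    return Rect(x, y, r.w, r.h)


def keyframes(img_w, img_h, regions, aspect=4 / 3):
    """Full photo first, then each annotated region in order (zoom, then pans)."""
    frames = [Rect(0, 0, img_w, img_h)]
    frames += [clamp(fit_aspect(r, aspect), img_w, img_h) for r in regions]
    return frames


def lerp_rect(a, b, t):
    """Linear interpolation between two viewports, t in [0, 1]."""
    return Rect(*(av + (bv - av) * t for av, bv in zip(a, b)))


def viewport_at(frames, s):
    """Viewport at progress s in [0, len(frames) - 1]; whole numbers hit keyframes.
    Requires at least one region, i.e. len(frames) >= 2."""
    i = min(int(s), len(frames) - 2)
    return lerp_rect(frames[i], frames[i + 1], s - i)


# Hypothetical 800x600 photo with three regions, mirroring the setup of Figure 2.
regions = [Rect(100, 100, 120, 90), Rect(500, 120, 60, 90), Rect(300, 400, 200, 100)]
frames = keyframes(800, 600, regions)
for s in (0, 0.5, 1, 1.5, 2, 3):
    print(s, tuple(round(v, 1) for v in viewport_at(frames, s)))
```

Fitting each region to the output aspect ratio before interpolating keeps the picture from being stretched. The renderer scales whatever viewport is current to fill the frame.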

Figure 2 GUI example of annotating photos

Figure 3 Snapshots of generated video

We have introduced a reminiscence video authoring tool that uses photo annotations. An average user, who may have no video-editing know-how, can select photos based on the intended theme of the video and create a movie by using a pre-defined template. The current implementation defines only a very simple template, and we plan to add various kinds of templates. We also plan to add a function for easily adding narration to the video by attaching sound annotations to each photo. In the current implementation, the user must add each annotation (such as regions in a photo) manually. Several research efforts address (semi-)automatic image annotation, and we plan to use existing techniques such as face detection algorithms to help users specify regions.

Advanced Telecommunications Research Institute International (ATR) holds the copyright to the authoring tool itself, which is built on top of the various software described here.

We would like to thank Koji Saito for the design and implementation of the authoring tool. This research was supported by the National Institute of Information and Communications Technology.

[1] Reminiscence Video for Higher Brain Dysfunctions. Proceedings of the General Conference of the Japan Society for Higher Brain Dysfunctions (2004).

[2] Ontology-based photo annotation. IEEE Intelligent Systems, Vol. 16, No. 3 (2001), 66-74.

[3] http://jena.sourceforge.net/

[5] http://www.foaf-project.org/

[7] http://www.w3.org/Submission/RDQL

[8] http://www.kinesissoftware.com/products/KineticFusion/rvmloverview.html